NVMe over Fabrics (NVMe-oF) Definition

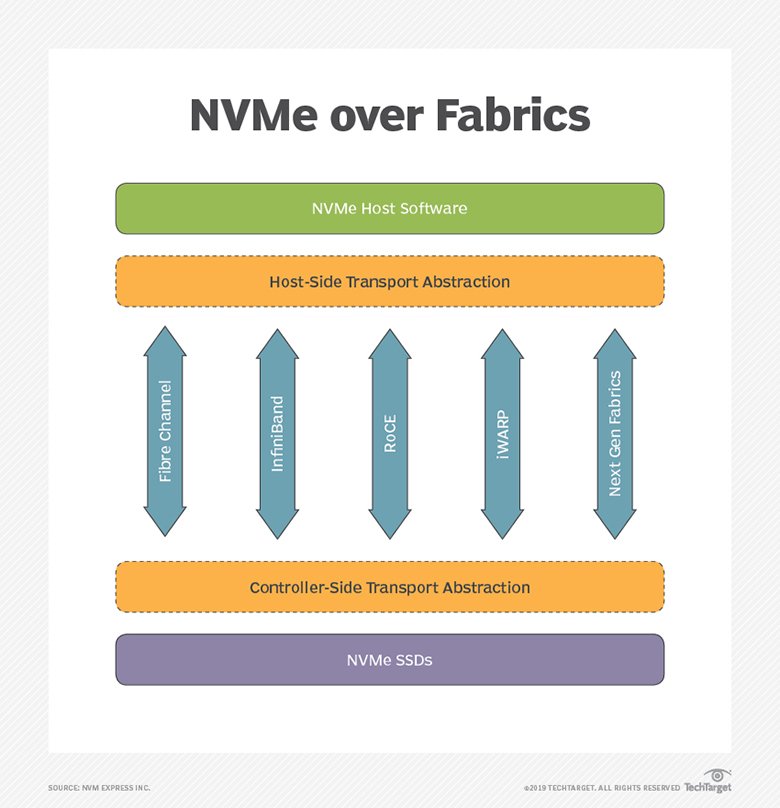

NVMe over Fabrics (NVMe-oF) is a technology specification designed to let a host computer use message-based nonvolatile memory express (NVMe) commands to transfer data to and from a target solid-state storage device or system over a network, such as Ethernet, Fibre Channel (FC) or InfiniBand.

NVM Express Inc., a nonprofit organization comprising more than 100 member technology companies, published version 1.0 of the NVMe-oF specification on June 5, 2016. A workgroup within the same organization released version 1.0 of the NVMe specification on March 1, 2011. NVMe version 1.3, released in May 2017, added features to enhance security, resource sharing and solid-state drive (SSD) endurance.

Vendors are working to develop a mature enterprise ecosystem to support end-to-end NVMe over Fabrics, including the server operating system (OS), server hypervisor, network adapter cards, storage OS and storage drives.

The NVM Express organization estimates that 90% of the NVMe-oF protocol is the same as the NVMe protocol, which is designed for local use over a computer’s Peripheral Component Interconnect Express (PCIe) bus.

Storage area network (SAN) switch and adapter vendors, including Brocade Communications Systems (now part of Broadcom Ltd.), Cavium Inc. (which acquired QLogic Corp. in 2016), Cisco Systems Inc. and Mellanox Technologies, are trying to position 32 Gbps FC as the logical fabric for NVMe flash.

NVMe over Fabrics vs. NVMe

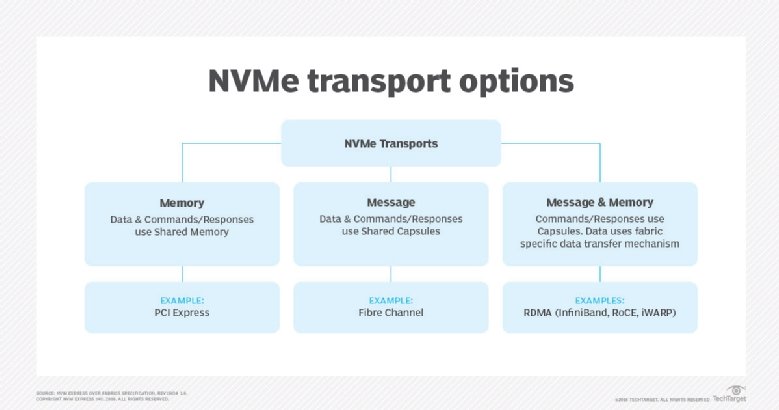

One of the main distinctions between NVMe and NVMe over Fabrics is the transport-mapping mechanism for sending and receiving commands and responses. NVMe-oF uses a message-based model for communication between a host and a target storage device. Local NVMe maps commands and responses to shared memory in the host over the PCIe interface protocol.
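To make the contrast concrete, here is a minimal Python sketch. It builds a simplified 64-byte NVMe Read command (64 bytes is the NVMe submission queue entry size), then shows the two delivery paths: locally, the entry is written into a ring in host memory and a doorbell value is updated; over a fabric, the same entry travels as the payload of a command capsule message. The class and function names are hypothetical, a local socket pair stands in for the network, and the sketch omits data pointers and the transport-specific framing (RDMA or Fibre Channel headers, for example) that a real implementation adds.

```python
import socket
import struct

SQE_SIZE = 64    # NVMe submission queue entries (commands) are 64 bytes
NVM_READ = 0x02  # NVM command set opcode for Read


def build_read_sqe(cid: int, nsid: int, slba: int, num_blocks: int) -> bytes:
    """Pack a simplified NVMe Read command; data-pointer fields stay zero."""
    sqe = bytearray(SQE_SIZE)
    sqe[0] = NVM_READ                                # CDW0 bits 7:0: opcode
    struct.pack_into("<H", sqe, 2, cid)              # CDW0 bits 31:16: command identifier
    struct.pack_into("<I", sqe, 4, nsid)             # CDW1: namespace ID
    struct.pack_into("<Q", sqe, 40, slba)            # CDW10-11: starting LBA
    struct.pack_into("<I", sqe, 48, num_blocks - 1)  # CDW12 bits 15:0: zero-based block count
    return bytes(sqe)


class LocalSubmissionQueue:
    """Hypothetical model of local NVMe: a ring in host memory plus a doorbell."""

    def __init__(self, depth: int):
        self.slots = [b""] * depth
        self.tail = 0
        self.doorbell = 0  # stands in for the controller's tail doorbell register

    def submit(self, sqe: bytes) -> None:
        # No head tracking or full check in this sketch
        self.slots[self.tail] = sqe                    # command lands in shared host memory
        self.tail = (self.tail + 1) % len(self.slots)
        self.doorbell = self.tail                      # controller is told the new tail


def send_capsule(sock: socket.socket, sqe: bytes, data: bytes = b"") -> None:
    """Fabric path: ship the same SQE (plus optional in-capsule data) as a message."""
    sock.sendall(sqe + data)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since a stream socket may deliver data in pieces."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed before the full capsule arrived")
        buf += chunk
    return buf


if __name__ == "__main__":
    sqe = build_read_sqe(cid=1, nsid=1, slba=4096, num_blocks=8)

    local = LocalSubmissionQueue(depth=16)
    local.submit(sqe)
    print("local doorbell now", local.doorbell)  # → local doorbell now 1

    host, target = socket.socketpair()
    send_capsule(host, sqe)
    received = recv_exact(target, SQE_SIZE)
    print("target got identical command:", received == sqe)  # → target got identical command: True
    host.close()
    target.close()
```

The point of the comparison is that the command bytes are identical in both paths; only the mechanism that carries them changes, which is consistent with most of the NVMe protocol carrying over unchanged to NVMe-oF.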

The NVM Express consortium defines NVMe-oF as a “common architecture that supports a range of storage networking fabrics for NVMe block storage protocol over a storage networking fabric. This includes enabling a front-side interface into storage systems, scaling out to large numbers of NVMe devices and extending the distance within a data center over which NVMe devices and NVMe subsystems can be accessed.”

NVMe-oF enables transports other than PCIe that extend the distance over which an NVMe host and an NVMe storage drive or subsystem can connect. The original design goal for NVMe over Fabrics was to add no more than 10 microseconds of latency between an NVMe host and a remote NVMe target storage device connected over an appropriate networking fabric, compared with the latency of an NVMe storage device attached to a local server's PCIe bus.
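Checking a deployment against that goal is simple arithmetic: subtract the local PCIe latency from the fabric-attached latency and compare the difference with the 10-microsecond budget. The sketch below does just that; the function names are hypothetical, and the latency figures in the usage lines are placeholders for illustration, not measurements.

```python
DESIGN_GOAL_ADDED_US = 10.0  # NVMe-oF goal: at most 10 microseconds added vs. local PCIe


def added_latency_us(local_us: float, remote_us: float) -> float:
    """Latency the fabric adds on top of the same device on a local PCIe bus."""
    return remote_us - local_us


def meets_design_goal(local_us: float, remote_us: float) -> bool:
    return added_latency_us(local_us, remote_us) <= DESIGN_GOAL_ADDED_US


def overhead_percent(local_us: float, remote_us: float) -> float:
    """Added latency expressed as a percentage of the local latency."""
    return 100 * added_latency_us(local_us, remote_us) / local_us


# Hypothetical figures for illustration only
print(meets_design_goal(80.0, 88.0), overhead_percent(80.0, 88.0))  # → True 10.0
print(meets_design_goal(80.0, 95.0))                                # → False
```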

NVMe is an alternative to the Small Computer System Interface (SCSI) standard for connecting and transferring data between a host and a peripheral target storage device or system. SCSI became a standard in 1986, when hard disk drives (HDDs) and magnetic tape were the most common storage media. NVMe is designed for use with faster media, such as SSDs and post-flash memory-based technologies. Paired with those media, NVMe can cut access times by orders of magnitude compared with storage built on the SCSI and Serial Advanced Technology Attachment (SATA) protocols developed for rotating media.

NVMe flash emerged in response to the growing number of use cases for high-performance flash drives. Traditional SCSI-based block commands sufficed when disk storage dominated in enterprises, but SCSI struggles to deliver the performance that enterprises demand for scale-out storage.

In comparison to SCSI, the NVMe standard provides a more streamlined register interface and command set to reduce the CPU overhead of the input/output (I/O) stack. Processing an I/O request with the streamlined NVMe command set takes fewer than half as many CPU instructions as with other storage protocols. Benefits of NVMe-based storage drives include lower latency, more parallel requests and higher performance.

NVMe supports up to 64K (65,535) I/O queues, each with a queue depth of up to 64K (65,536) commands. Queues are typically assigned per processor core, so a command and its completion are handled on the same core, which lets multicore processors achieve a high degree of parallelism. No I/O locking is required, since each core or application thread gets a dedicated queue.
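A minimal Python model of the per-core queue layout follows. It is a sketch, not driver code: each hypothetical `QueuePair` belongs to one core, enforces the NVMe limits described above, and reports a full queue once depth minus 1 commands are outstanding, which is how NVMe's ring-style queues distinguish "full" from "empty".

```python
from collections import deque

MAX_IO_QUEUES = 65_535      # NVMe allows up to 64K I/O queues...
MAX_QUEUE_ENTRIES = 65_536  # ...each with up to 64K entries


class QueuePair:
    """One submission/completion queue pair owned by a single CPU core."""

    def __init__(self, core_id: int, depth: int):
        if not 2 <= depth <= MAX_QUEUE_ENTRIES:
            raise ValueError("queue depth must be between 2 and 65,536")
        self.core_id = core_id
        self.depth = depth
        self.submission = deque()
        self.completion = deque()

    def submit(self, command_id: int) -> None:
        # A ring with `depth` slots holds at most depth - 1 commands: one slot
        # stays empty so a full queue can be told apart from an empty one.
        if len(self.submission) >= self.depth - 1:
            raise RuntimeError(f"queue on core {self.core_id} is full")
        self.submission.append(command_id)

    def complete_next(self) -> int:
        # Stand-in for the controller: the completion is posted to the same
        # pair, so the submitting core also reaps it. No lock is needed in
        # this model because only the owning core touches the pair.
        command_id = self.submission.popleft()
        self.completion.append(command_id)
        return command_id


def create_queue_pairs(num_cores: int, depth: int) -> list[QueuePair]:
    """Give every core its own queue pair, up to the NVMe I/O queue limit."""
    if not 1 <= num_cores <= MAX_IO_QUEUES:
        raise ValueError("number of I/O queues must be between 1 and 65,535")
    return [QueuePair(core, depth) for core in range(num_cores)]


pairs = create_queue_pairs(num_cores=4, depth=8)
pairs[2].submit(101)
pairs[2].submit(102)
print(pairs[2].complete_next(), list(pairs[2].completion))  # → 101 [101]
```

Because no two cores ever share a queue pair in this layout, there is nothing to lock; contention only appears if software maps several cores onto one queue.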

PCIe-connected NVMe SSDs allow applications to talk directly to storage. A PCIe 3.0 device with four lanes can deliver about 4 GBps (gigabytes per second) of bandwidth.
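The 4 GBps figure follows from PCIe 3.0's signaling rate of 8 gigatransfers per second (GT/s) per lane and its 128b/130b line encoding. The helper below is a back-of-the-envelope sketch that ignores packet and protocol overhead, so real throughput is somewhat lower; the function name is hypothetical.

```python
def pcie_bandwidth_gbytes_per_s(gt_per_s: float, lanes: int, encoding: float) -> float:
    """One-direction PCIe bandwidth in GB/s after line encoding.

    Packet headers and other protocol overhead are ignored.
    """
    gbits_per_lane = gt_per_s * encoding  # each transfer carries one bit per lane
    return gbits_per_lane * lanes / 8     # 8 bits per byte


# PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding
gen3_x4 = pcie_bandwidth_gbytes_per_s(8.0, 4, 128 / 130)
print(f"PCIe 3.0 x4: {gen3_x4:.2f} GB/s ({gen3_x4 * 8:.1f} Gbps)")  # → PCIe 3.0 x4: 3.94 GB/s (31.5 Gbps)
```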

Although NVMe is built to exploit the performance of PCIe Gen 3, it lacks a native messaging layer to direct traffic between remote hosts and the NVMe SSDs in an array. NVMe-oF is the industry's response: it defines that messaging layer.

NVMe over Fabrics using RDMA

NVMe-oF using remote direct memory access (RDMA) is defined by a technical subgroup of the NVM Express organization. Available mappings include RDMA over Converged Ethernet (RoCE) and Internet Wide-Area RDMA Protocol (iWARP) for Ethernet, as well as InfiniBand.
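On Linux hosts, the open source nvme-cli utility is one common way to attach to an NVMe-oF target over RDMA. The sketch below only assembles an `nvme connect` command line and prints it; it does not run it. The helper name, target address and subsystem NVMe Qualified Name (NQN) are hypothetical placeholders; port 4420 is the IANA-assigned NVMe-oF port. Actually running the command would require an RDMA-capable adapter and the kernel's nvme-rdma module.

```python
import shlex

NVMF_PORT = "4420"  # IANA-assigned port for NVMe over Fabrics


def nvme_connect_rdma(traddr: str, subsys_nqn: str, trsvcid: str = NVMF_PORT) -> list[str]:
    """Build (but do not execute) an nvme-cli command to connect over RDMA."""
    if not subsys_nqn.startswith("nqn."):
        raise ValueError("subsystem NQNs start with 'nqn.'")
    return [
        "nvme", "connect",
        "--transport=rdma",      # RoCE, iWARP and InfiniBand all use the rdma transport
        f"--traddr={traddr}",    # target's IP address on the RDMA fabric
        f"--trsvcid={trsvcid}",  # transport service ID, i.e. the target port
        f"--nqn={subsys_nqn}",   # which NVM subsystem on the target to attach
    ]


# Hypothetical target: documentation-range IP address and an example NQN
args = nvme_connect_rdma("192.0.2.10", "nqn.2016-06.io.example:flash-array-1")
print(shlex.join(args))
```

Building the argument list separately keeps the example safe to run anywhere; on a suitably equipped host, the list could be handed to `subprocess.run` instead of printed.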

Running NVMe over Fabrics on RDMA essentially requires building a new storage network, which boosts performance. The tradeoff is reduced scalability compared with the FC protocol.

NVMe over Fabrics using Fibre Channel (FC-NVMe)

NVMe over Fabrics using Fibre Channel (FC-NVMe) was developed by the T11 committee of the InterNational Committee for Information Technology Standards (INCITS). FC allows the mapping of other protocols on top of it, such as NVMe, SCSI and IBM’s proprietary Fibre Connection (FICON), to send data and commands between host and target storage devices.

FC-NVMe traffic can coexist with existing FC traffic on the same Gen 6 FC infrastructure, allowing data centers to avoid a forklift upgrade.

Customers can add FC-NVMe support to existing FC network switches with a firmware upgrade, provided the host bus adapters (HBAs) support 16 Gbps or 32 Gbps FC and the storage targets are NVMe-oF-capable.

The SCSI-based FC protocol already supports access to shared NVMe flash, but translating encapsulated SCSI commands into NVMe commands imposes a performance penalty. The Fibre Channel Industry Association (FCIA) is helping to drive standards for backward-compatible FC-NVMe implementations, allowing a single FC-NVMe adapter to support SCSI-based disks, traditional SSDs and PCIe-connected NVMe flash cards.
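The translation penalty comes from an extra mapping step on every I/O. As an illustration, the Python sketch below converts a SCSI READ(10) command descriptor block (CDB) into the fields of an NVMe Read command: the CDB's big-endian 32-bit logical block address (LBA) and 16-bit transfer length become a little-endian starting LBA split across command dwords 10 and 11 and a zero-based block count in dword 12. The helpers are hypothetical and simplified; a real translation layer must also handle flags, other opcodes and error reporting. Native FC-NVMe avoids this step by carrying NVMe commands directly.

```python
import struct

SCSI_READ_10 = 0x28  # SCSI READ(10) operation code
NVM_READ = 0x02      # NVMe NVM command set Read opcode


def translate_read10(cdb: bytes) -> dict:
    """Map a SCSI READ(10) CDB onto NVMe Read command dwords."""
    if len(cdb) != 10 or cdb[0] != SCSI_READ_10:
        raise ValueError("not a READ(10) CDB")
    lba = struct.unpack_from(">I", cdb, 2)[0]     # CDB bytes 2-5: big-endian LBA
    blocks = struct.unpack_from(">H", cdb, 7)[0]  # CDB bytes 7-8: big-endian block count
    if blocks == 0:
        raise ValueError("zero-length transfers are not handled in this sketch")
    return {
        "opcode": NVM_READ,
        "cdw10": lba & 0xFFFFFFFF,  # starting LBA, low 32 bits
        "cdw11": lba >> 32,         # starting LBA, high 32 bits (always 0 for READ(10))
        "cdw12": blocks - 1,        # NVMe counts blocks from zero
    }


def pack_nvme_dwords(cmd: dict) -> bytes:
    """Little-endian encoding of CDW10-12, as stored at bytes 40-51 of the command."""
    return struct.pack("<III", cmd["cdw10"], cmd["cdw11"], cmd["cdw12"])


# READ(10) of 8 blocks starting at LBA 4096: opcode, flags, LBA, group, length, control
cdb = struct.pack(">BBIBHB", SCSI_READ_10, 0, 4096, 0, 8, 0)
cmd = translate_read10(cdb)
print(cmd)  # → {'opcode': 2, 'cdw10': 4096, 'cdw11': 0, 'cdw12': 7}
```

Each individual step is cheap, but it runs on every command in the data path, which is why native NVMe transport is preferred for latency-sensitive flash.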

Storage industry support for NVMe and NVMe-oF

Initial deployments of NVMe have been as direct-attached storage (DAS) in servers, with NVMe flash cards replacing traditional SSDs as the storage media. This arrangement offers promising performance gains when compared with existing all-flash storage, but it also has its drawbacks.

NVMe requires third-party software tools to optimize write endurance and data services. In NVMe arrays, the storage controller remains a bottleneck.

NVMe-oF represents the next phase in the evolution of the technology, paving the way for the arrival of rack-scale flash systems that integrate native end-to-end data management. The pace of mainstream adoption will depend on how quickly across-the-stack development of the NVMe ecosystem occurs.

Established storage vendors and startups alike are jockeying for position. All-flash NVMe and NVMe-oF storage products include:

- DataDirect Networks Flashscale;

- Datrium DVX hybrid system;

- Kaminario K2.N;

- NetApp FAS arrays, including Flash Cache with NVMe SSD connectivity;

- Pure Storage FlashArray//X; and

- Tegile IntelliFlash (acquired by Western Digital Corp. in 2017).

In December 2017, IBM previewed an NVMe-oF InfiniBand configuration integrating its Power9 Systems and FlashSystem V9000, a product it said is geared for cognitive workloads that ingest massive quantities of data.

In 2017, Hewlett Packard Enterprise introduced its HPE Persistent Memory server-side flash storage using ProLiant Gen9 servers and NVMe-compliant Persistent Memory PCIe SSDs.

Dell EMC was one of the first storage vendors to bring an all-flash NVMe product to market. The DSSD D5 array was built with Dell PowerEdge servers and a proprietary NVMe-over-PCIe network mesh. The product was shelved in 2017 due to poor sales.

A handful of startups have also launched NVMe all-flash arrays:

- Apeiron Data Systems uses NVMe drives for media and houses data services in field-programmable gate arrays (FPGAs) instead of servers attached to storage arrays.

- E8 Storage uses its software to replicate snapshots from the E8-D24 NVMe flash array to attached branded compute servers, a design that aims to reduce management overhead on the array.

- Excelero software-defined storage runs on standard servers.

- Mangstor MX6300 NVMe-oF arrays are based on Dell EMC PowerEdge servers outfitted with branded NVMe PCIe cards.

- Pavilion Data Systems has a branded Pavilion Memory Array built with commodity network interface cards (NICs), PCIe switches and processors. Pavilion’s 4U appliance contains 20 storage controllers and 40 Ethernet ports, which connect to 72 NVMe SSDs using the internal PCIe switch network.

- Vexata Inc. offers its VX-100 and Vexata Active Data Fabric distributed software. The vendor’s Ethernet-connected NVMe array includes a front-end controller, a cut-through router based on FPGAs and data nodes that manage I/O schedules and metadata.

Chipmakers, network vendors prep the market

Computer hardware vendors broke new ground on NVMe over Fabrics technologies in 2017. Networking vendors are waiting for storage vendors to catch up and start selling NVMe-oF-based arrays.

FC switch rivals Brocade and Cisco each rolled out 32 Gbps (Gen 6) FC gear that supports NVMe flash traffic, including FC-NVMe fabric capabilities. Also entering the fray was Cavium, refreshing the QLogic Gen 6 FC and FastLinQ Ethernet adapters for NVMe-oF.

Marvell introduced its 88SS1093 NVMe SSD controllers, which use the company's low-density parity check (LDPC) error correction technology to support triple-level cell (TLC) as well as multi-level cell (MLC) NAND flash.

Mellanox Technologies has developed an NVMe-oF storage reference architecture based on its BlueField system-on-a-chip (SoC) programmable processors. Similar to hyper-converged infrastructure, BlueField integrates compute, networking, security, storage and virtualization tools in a single device.

Microsemi Corp. teamed with American Megatrends Inc. to develop an NVMe-oF reference architecture. The system incorporates Microsemi Switchtec PCIe switches in Intel Rack Scale Design disaggregated composable infrastructure hardware running American Megatrends’ Fabric Management Firmware.

Among drive makers, Intel Corp. led the way with dual-ported 3D NAND-based NVMe SSDs and Intel Optane NVMe drives, which are based on 3D XPoint memory technology developed by Intel and chipmaker Micron Technology, Inc. Intel claims Optane NVMe drives are approximately eight times faster than NAND flash memory-based NVMe PCIe SSDs.

Micron rolled out its 9200 Series of NVMe SSDs and also branched into selling storage, launching the Micron Accelerated Solutions NVMe reference architecture and Micron SolidScale NVMe-oF-based appliances.

Seagate Technology introduced its Nytro 5000 M.2 NVMe SSD and started sampling a 64 TB NVMe add-in card.