EMC Announces Next-Generation VNX – Takes It Full Multicore

Virtualization as a Storage Driver

The VNX line has seen its greatest growth as an engine for server virtualization. Because server virtualization platforms like VMware vSphere allow hyper-consolidation, storage arrays become aggregators for tens, hundreds, or even thousands of virtual machines.

Designing storage for virtual environments isn’t always easy.

It can be difficult to plan for the simultaneous capacity and performance needs of the VMs. Many storage arrays are either capacity-optimized (EMC Isilon, for example) or performance-optimized (all-Flash arrays, for example), but virtualization environments need both.

Let’s look at some assumptions. Suppose the average VM uses 100GB of space and requires anywhere from 50 to 100 IOPS. To support 1,000 VMs on a single array, you need 100TB of usable capacity and the ability to sustain 50,000 to 100,000 IOPS at low latency. Want to support even more VMs? Your requirements grow proportionally. There aren’t a lot of arrays out there today that can meet these needs.
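
The sizing arithmetic above is simple multiplication, sketched below. This is a rough model under the stated per-VM assumptions only: it uses decimal units (1TB = 1,000GB) and deliberately ignores RAID overhead, snapshots, and headroom, which a real sizing exercise would add. The function name is hypothetical.

```python
def size_array(num_vms: int, gb_per_vm: float, iops_per_vm: float) -> tuple[float, float]:
    """Return (usable capacity in TB, aggregate IOPS) for a VM population.

    Decimal units (1 TB = 1,000 GB); no RAID, snapshot, or headroom overhead.
    """
    capacity_tb = num_vms * gb_per_vm / 1000
    total_iops = num_vms * iops_per_vm
    return capacity_tb, total_iops

# 1,000 VMs at 100GB each, at the low and high ends of the 50-100 IOPS range
print(size_array(1000, 100, 50))   # (100.0, 50000)
print(size_array(1000, 100, 100))  # (100.0, 100000)
```

Capacity stays fixed at 100TB while the IOPS target doubles across the range, which is why an array has to be sized for both at once.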

The Multicore Difference

To meet these needs, EMC redesigned its array software from the ground up. The new VNXs use Intel Sandy Bridge CPUs. With up to 8 cores per socket and 40 lanes of PCIe Gen3 per socket, there’s a lot of processing power and I/O bandwidth available, if the software can take advantage of it.
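
To put "a lot of I/O bandwidth" in numbers, the sketch below computes the link-level bandwidth of PCIe Gen3, which signals at 8 GT/s per lane with 128b/130b encoding. It is a theoretical per-direction ceiling for 40 lanes, not a measured VNX figure; packet and protocol overhead lower real throughput further.

```python
# PCIe Gen3: 8 GT/s per lane, 128b/130b line encoding
GEN3_TRANSFERS_PER_SEC = 8e9
GEN3_ENCODING_EFFICIENCY = 128 / 130

def pcie_gen3_bandwidth_gbytes(lanes: int) -> float:
    """Approximate bandwidth in GB/s, per direction, before protocol overhead."""
    bits_per_sec = lanes * GEN3_TRANSFERS_PER_SEC * GEN3_ENCODING_EFFICIENCY
    return bits_per_sec / 8 / 1e9  # bits -> bytes -> GB

print(round(pcie_gen3_bandwidth_gbytes(1), 3))   # 0.985 GB/s per lane
print(round(pcie_gen3_bandwidth_gbytes(40), 1))  # 39.4 GB/s per socket
```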

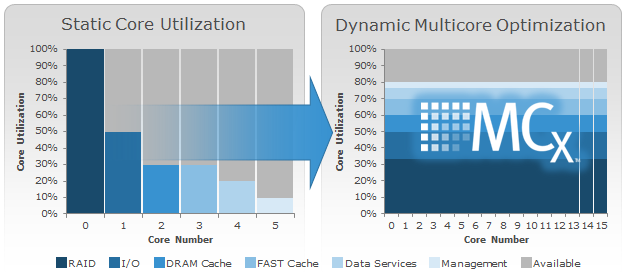

In the previous VNX, tasks were spread across cores, but it was still one task per core, which is not the most efficient use of the available CPU resources. With MCx, EMC has rewritten the core OS functions to use massive hyperthreading, so they can take full advantage of the multicore architecture.

You can get a sense for the difference in the graphic below. The left-hand side shows how tasks get spread across cores in the pre-MCx VNX, while the right-hand side shows how MCx handles processing.
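
A toy model helps show why one task per core wastes resources. The sketch below is not EMC's scheduler; the function names and work units are hypothetical. It compares two policies: pinning each function's work to a single core, and letting any core pick up any independent work item (greedy longest-first assignment to the least-loaded core). The result is the load on the busiest core, which bounds how long the batch takes.

```python
import heapq

def static_makespan(work_by_function: dict[str, list[int]], cores: int) -> int:
    """One task per core: all work items of a function run on that function's core."""
    loads = [0] * cores
    for i, items in enumerate(work_by_function.values()):
        loads[i % cores] += sum(items)
    return max(loads)

def shared_makespan(work_by_function: dict[str, list[int]], cores: int) -> int:
    """Any core runs any item: assign each item (largest first) to the least-loaded core."""
    heap = [(0, c) for c in range(cores)]  # (current load, core id)
    heapq.heapify(heap)
    items = sorted((w for ws in work_by_function.values() for w in ws), reverse=True)
    for w in items:
        load, core = heapq.heappop(heap)
        heapq.heappush(heap, (load + w, core))
    return max(load for load, _ in heap)

# Hypothetical workload: work units per OS function (illustrative numbers only)
work = {
    "cache": [5, 5, 5, 5, 5, 5],
    "raid": [4, 4, 4],
    "fast_cache": [2, 2],
    "drivers": [1],
}
print(static_makespan(work, cores=4))  # 30: the "cache" core is the bottleneck
print(shared_makespan(work, cores=4))  # 13: work is spread over all four cores
```

With pinning, one busy function saturates its core while the others sit mostly idle; sharing the work brings the busiest core close to the average load of 47 / 4 units.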

Performance

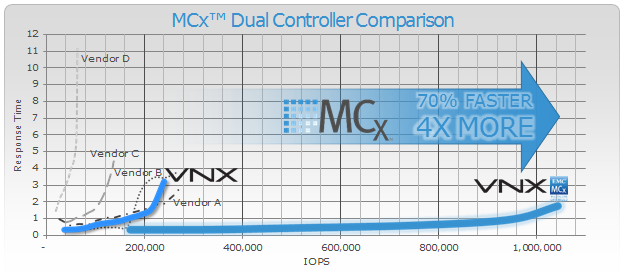

As you might expect, hyperthreading on this scale gives the array’s overall performance a huge boost. Compared to the previous VNX, the MCx version offers:

- 6.3x better caching

- 4.9x more file IOPS

- 5.2x more database transactions (on block storage)

Just providing a high number of IOPS isn’t enough, though; you also want to minimize latency. By combining hyperthreading with its caching algorithms, the MCx version of the VNX can provide sub-millisecond response times even under extremely high loads.
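
IOPS and latency are linked by Little's Law, a general queueing result (not an EMC figure): the average number of I/Os in flight equals throughput multiplied by response time. The sketch below uses 0.5ms as an example of "sub-millisecond"; it shows that a load of 100,000 IOPS at that latency means about 50 I/Os outstanding, while the same load at 5ms means ten times as many requests waiting.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's Law (L = lambda * W): average number of I/Os in flight."""
    return iops * latency_ms / 1000  # latency converted from ms to seconds

print(outstanding_ios(100_000, 0.5))  # 50.0
print(outstanding_ios(100_000, 5.0))  # 500.0
```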

Capacity

Since I’ve covered performance, let me briefly address capacity.

The new VNXs support greater capacity than the previous version, mostly through a higher maximum number of disk drives. That’s not all, though: the new generation also adds block-based data deduplication, run as a background post-process job, giving the arrays an even higher effective capacity.
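
The general technique behind post-process block deduplication can be sketched as follows: after data has been written, a background pass splits it into fixed-size blocks, fingerprints each one, and counts how many are unique. EMC's actual block size, hash function, and metadata handling are not described here, so the 8KB block size and SHA-256 fingerprint below are assumptions for illustration, as are the names `dedupe_ratio` and the sample volume. A real implementation would also remap duplicate blocks to a single stored copy.

```python
import hashlib

BLOCK_SIZE = 8192  # assumed block size, chosen for illustration only

def dedupe_ratio(data: bytes, block_size: int = BLOCK_SIZE) -> float:
    """Post-process scan of already-written data: logical blocks / unique blocks."""
    seen = set()
    total = 0
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        seen.add(hashlib.sha256(block).digest())  # fingerprint each block
        total += 1
    return total / len(seen) if seen else 1.0

# Hypothetical volume: 10 VMs cloned from one 8-block "golden image",
# each with one block of unique data.
base_image = b"".join(bytes([j]) * BLOCK_SIZE for j in range(8))
volume = b"".join(base_image + f"vm{i:06d}".encode() * 1024 for i in range(10))
print(dedupe_ratio(volume))  # 5.0: 90 logical blocks, 18 unique
```

Cloned VMs are the favorable case for deduplication, since most of their blocks are shared; a post-process design keeps the hashing work off the write path.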

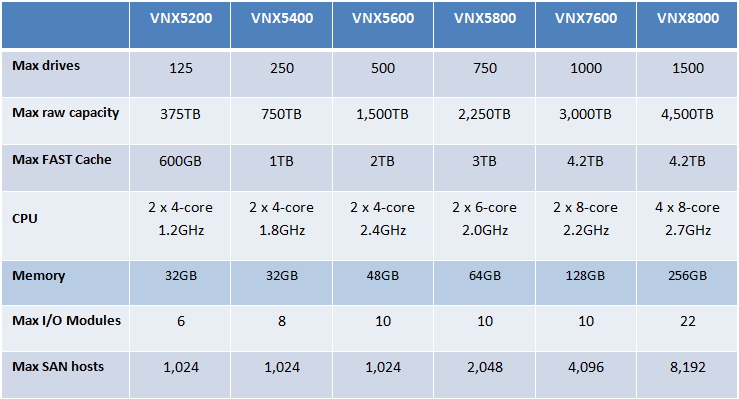

The Models

The new VNX models 5200, 5400, 5600, 5800, and 7600 will replace the current 5100, 5300, 5500, 5700, and 7500, respectively. Additionally, EMC is introducing the new “monster” in the family, the VNX8000. The 8000 will max out at 1,500 disk drives, 32 cores, and 256GB of RAM.

Returning to the earlier assumptions (100GB of disk space and 100 IOPS per VM, the upper end of the range), the new models can support the following numbers of VMs:

- VNX5400 – 1,000 VMs

- VNX5600 – 1,300 VMs

- VNX5800 – 1,700 VMs

- VNX7600 – 2,500 VMs

- VNX8000 – 6,600 VMs
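
Working backwards from those VM counts with the same assumptions gives the aggregate capacity and IOPS each model is implicitly being credited with. The sketch below only multiplies the quoted figures; the results are derived from the article's assumptions, not EMC-published specifications.

```python
GB_PER_VM = 100
IOPS_PER_VM = 100

# VM counts quoted for each model (thin provisioning enabled)
VMS_PER_MODEL = {
    "VNX5400": 1_000,
    "VNX5600": 1_300,
    "VNX5800": 1_700,
    "VNX7600": 2_500,
    "VNX8000": 6_600,
}

def implied_load(vms: int) -> tuple[int, int]:
    """Return (usable capacity in TB, aggregate IOPS) implied by a VM count."""
    return vms * GB_PER_VM // 1000, vms * IOPS_PER_VM

for model, vms in VMS_PER_MODEL.items():
    tb, iops = implied_load(vms)
    print(f"{model}: {vms:,} VMs -> {tb} TB usable, {iops:,} IOPS")
```

For the VNX8000, for example, this works out to 660TB of usable capacity and 660,000 IOPS.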

And that’s all with thin provisioning turned on. Turn it off to free up some CPU cycles, and the VNX8000 will support up to 8,000 VMs running simultaneously.

As is usual with EMC product launches, the older VNXs are still available and will likely remain so through the end of 2013. After that, I expect them to be withdrawn from sale, although they’ll remain supported.

There is also talk of an MCx version of the VNXe (the VNX’s little brother), but that won’t be coming until 2014.

Specifications

Here’s a quick summary chart comparing the new models:

All drive access is via dual 6Gb/s SAS 2.0 connections. Standard EMC SSDs and HDDs are supported in both 2.5″ and 3.5″ form factors. Supported HDDs include 15k, 10k, and 7.2k RPM drives. At this time, the largest supported drive is the 3TB 7.2k RPM drive. I expect that VNX will follow Isilon and offer support for 4TB drives in the future.

Availability

All of the new VNX models, with the exception of the VNX5200, have actually been available for purchase for the last month or so. (Yes, this product went GA before it was publicly announced…) The 5200 is expected to be available in Q4 of this year. To make up for its delay, EMC is offering some special promotional pricing on the next-up model, the VNX5400.

The VNX8000, while available today, is not yet available at full capacity. It initially supports a maximum of 1,000 drives and a maximum raw capacity of 3,000TB. I anticipate that a point release of the array’s OS will give it the full 1,500-drive support.
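
The 3,000TB figure is simply 1,000 drives multiplied by the largest supported 3TB drive. The sketch below applies the same raw-capacity arithmetic to the full 1,500-drive limit, plus a hypothetical 4TB drive, which is only expected, not announced. These are raw numbers, before RAID, hot spares, and formatting overhead.

```python
LARGEST_DRIVE_TB = 3  # 3TB 7.2k RPM drive, the largest currently supported

def max_raw_capacity_tb(drive_count: int, drive_tb: int = LARGEST_DRIVE_TB) -> int:
    """Raw capacity in TB: drive count times per-drive capacity."""
    return drive_count * drive_tb

print(max_raw_capacity_tb(1_000))              # 3000: initial VNX8000 limit
print(max_raw_capacity_tb(1_500))              # 4500: full 1,500-drive support
print(max_raw_capacity_tb(1_500, drive_tb=4))  # 6000: hypothetical 4TB drives
```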